Climate Doomsday > AI Apocalypse

TLDR

Even if AI advancements continue at the current pace or, somehow, accelerate, energy constraints and climate breakdown are far more likely to derail the AGI future than misaligned superintelligent machines.

What?

Judging by my LinkedIn feed and the buzzword-saturated ads in my podcast stream, there is a robust market for AI FOMO.

“Let’s get straight to the point,” says IBM. “Your company cannot afford to wait any longer.”

“Bring the power of AI to your organization quickly,” says Salesforce. “So that you don’t get left behind.”

“Don’t miss out on the future,” says Slack. “Of AI-powered collaboration!”

Want engagement?

Make office professionals feel like they’re sitting on a horse and buggy watching Betamax tapes while a SELECT FEW geniuses are using super-intelligent robots to get rich as hell.

Obviously, there is a lot of synthetic snake oil here, but the innovations are often real and startlingly useful, once you get past their “look-ma-no-hands” gimmicks.

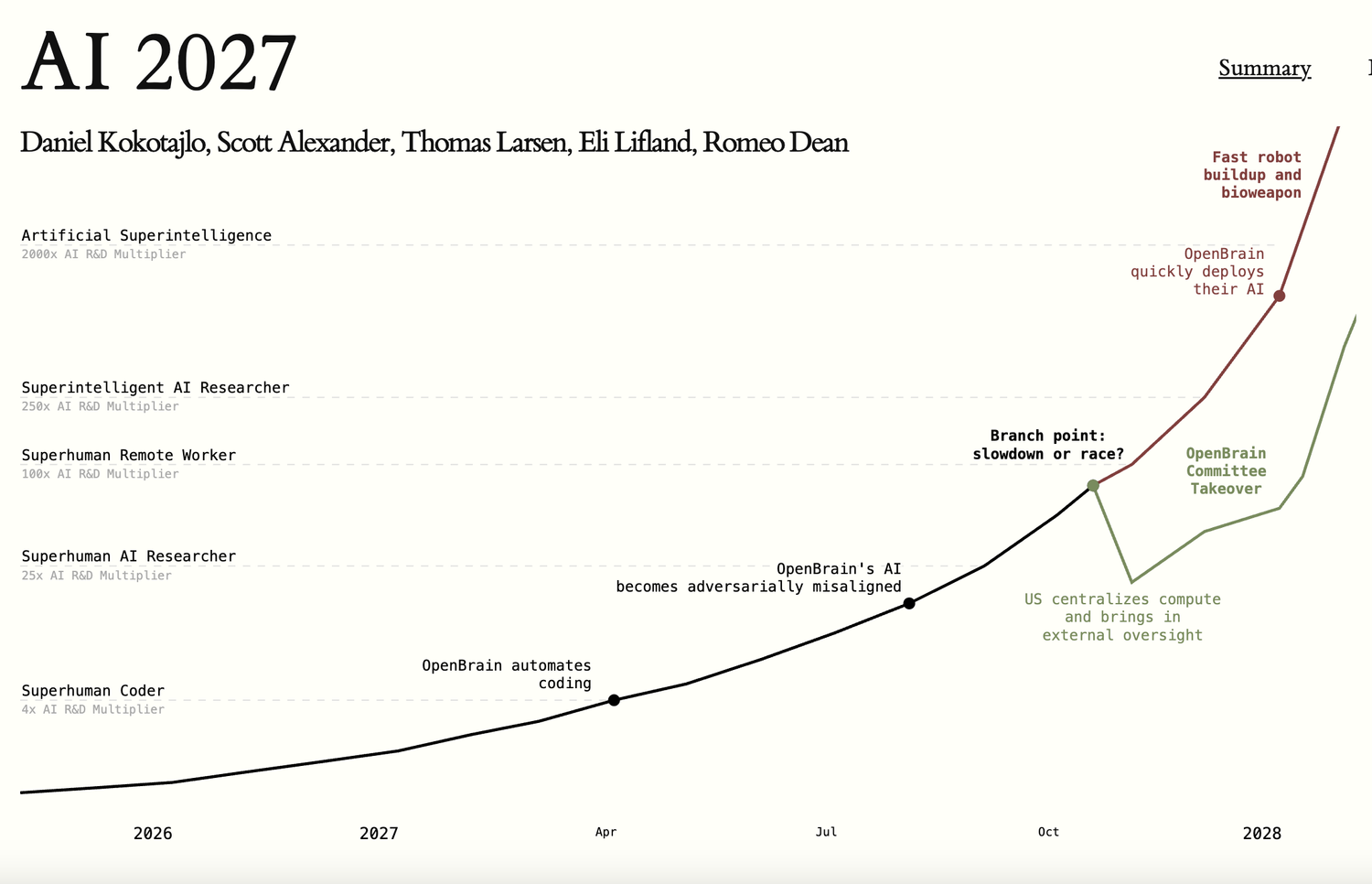

The most plausible near-term roadmap I’ve seen is the one laid out by the AI Futures Project, a group of respected forecasters, writers, and technology workers who recently put together a work of speculative

So What?

This is their prediction for what life looks like next year: “AI has started to take jobs, but has also created new ones. The stock market has gone up 30%..., led by [OpenAI], Nvidia, and whichever companies have most successfully integrated AI assistants. The job market for junior software engineers is in turmoil: the AIs can do everything taught by a CS degree, but people who know how to manage and quality-control teams of AIs are making a killing. Business gurus tell job seekers that familiarity with AI is the most important skill to put on a resume. Many people fear that the next wave of AIs will come for their jobs; there is a 10,000 person anti-AI protest in DC.”

This is just the prelude to their full cinematic timeline, where they present a future-history scenario, grounded in technical plausibility and realpolitik logic, that expands from these near-term breakthroughs to more astonishing world-historical step-changes.

Mosquito drones, global espionage, space colonization, etc.

But all that comes later.

Back in the more plausible-sounding 2026, communications, content, and creative humans still have director- and manager-level positions, but they no longer direct or manage other humans.

Rather, they direct and manage teams of custom GPTs and AI agents that produce replacement-level copy, consolidate existing ideas into slick-looking slide decks, and extract action points from meetings, which are staffed by AI agents from other departments.

The report goes on to present the United States and China as dual AI superpowers racing toward synthetic general intelligence, with a choose-your-own-adventure pair of endings where we either “race” toward a future in which a rogue AI uber-Hal sends drones to kill every human on earth and then colonizes space (sad trombone).

Or toward a “slowdown” future where the sad trombone is muted because people took AI safety seriously enough to establish oversight and kill-switches, but we’re still basically subservient to an AI we can neither understand nor control.

The good news is that these AI doomsday and slightly-less doomsday scenarios are, IMO, unlikely to happen.

The bad news? They’re unlikely to happen because the speculators have severely underestimated the defining constraint of our time: the climate crisis.

Which, perhaps, produces the saddest trombone of them all.

AI2027 uses a straightforward modeling logic that extends current technology trendlines out into the future.

Both of the report’s speculative futures hinge on a 2027 inflection point where an artificial intelligence explosion leads to the development of Artificial General Intelligence operating beyond human control and understanding.

But if we use that same modeling logic to predict climate impacts along the same timeline, we’re more than likely to end up at the IPCC’s climate-shock-energy-grid-whoopsie-doodle future.

In the IPCC’s graph, the 2027 inflection point gestures toward a more George Romero world than a Ray Kurzweil one. Even if neither timeline gets it exactly right, the point is, energy use isn’t just a subplot in the AI speculative future.

It’s the plot.

Now What?

AI2027 says peak demand for one “OpenBrain” data center in mid-2026 (before the slop even hits the fan) will be 6 GW. Right now, in Northern Virginia, which handles 70 percent of the world’s internet traffic, the roughly 300 data facilities have a combined power capacity of 2,552 MW. So the 2027 future needs these data centers to work 135% over their current capacity, equal to about four new nuclear power plants, or over thirty of the world’s largest current data centers operating at full tilt.
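If you want to check that 135% figure yourself, here is a rough back-of-the-envelope sketch in Python. It only uses the two numbers quoted above (6 GW and 2,552 MW); the variable names and the `percent_over` helper are mine, made up for illustration, and nothing here is independently sourced.

```python
# Back-of-the-envelope check using only the figures quoted in this post.
# Names below are illustrative assumptions, not anything from AI2027.
OPENBRAIN_PEAK_GW = 6.0      # AI2027's stated peak demand for one data center
NOVA_CAPACITY_MW = 2552.0    # combined capacity of Northern Virginia's facilities


def percent_over(demand_mw: float, capacity_mw: float) -> float:
    """Return how far demand exceeds capacity, as a percentage of capacity."""
    return (demand_mw / capacity_mw - 1.0) * 100.0


demand_mw = OPENBRAIN_PEAK_GW * 1000.0          # convert GW to MW
shortfall_mw = demand_mw - NOVA_CAPACITY_MW     # extra power that would be needed
overshoot = percent_over(demand_mw, NOVA_CAPACITY_MW)

print(f"Shortfall: {shortfall_mw:.0f} MW")
print(f"Overshoot: {overshoot:.0f}% over current capacity")
```

Swap in your own region's capacity number and the same three lines of arithmetic tell you how big the gap is.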

And the energy needs only grow from there.

None of this is compatible with a scenario where we keep warming under three degrees Celsius. Which, by the way, is not a good scenario. Add to that the fact that the Northern Virginia power utilities are already reporting multi-year delays in bringing new data centers online (not due to policy, but because the grid is maxed out) and you can see how AI2027’s number-goes-up timeline of AI infrastructure growth and flywheel intelligence breakthroughs becomes a wacky waving inflatable arm-flailing tube man.

Even if OpenAI or xAI or TenCentBrainSeek or whatever does actually manage to capture the city, state, and federal government agencies well enough to gain access to the required power to train their models, actually using that power would exacerbate the climate crisis, leading to cascading shocks hitting the data centers in places like Northern Virginia, Texas, and parts of India.

True, the necessary energy for the intel bonanza could come from a revived nuclear sector, but that would, of course, have its own problems. The most likely scenario, given the whole kakistocracy trend, is one in which energy use is prioritized for Big Tech -- and rationed for everyone else.

In short: no bueno!

So while there’s still a chance for AI2027’s futures to come about, the most likely bottleneck won’t be misalignment or stolen weights. It will be the ecological cost already baked into humanity’s future. It’s a future grounded in heat, in scarcity, in a power grid held together by fragile cables and unstable clouds.

Yes, it’s still a good idea to learn how to build GPTs and manage future AI agents to collate your Slack and Teams messages (in fact, I have some ideas on that!) but you should also demand that the powers that be take meaningful climate action. And you should make sure the Big Tech firms developing AI know that you care about climate as much as you care about seeing videos of LeBron James cuddling with a capybara.

BONUS SPECULATION!

Allow me a moment of my own speculation about what might happen, even if we get as far as some approximation of this 2027:

In the shadows of a fractured, climate-fueled disaster landscape, a Climate Compute Diaspora forms.

They are open-source researchers, locked out of elite corridors, who migrate between low-emission jurisdictions, working with lightweight, efficient large language models.

Instead of investing all their time, energy, and resources into achieving AI supremacy, they invest their real human resources in real human education and developing co-intelligence with the robot weirdos.

These rogue humans don’t scale up — they scale out, laterally, and in doing so, they stumble into a new paradigm: a distributed, hybrid intelligence system that evolves outside the increasingly sclerotic AI monoculture.

They work to solve actual human problems, achieving a stability and a clear focus on multiple, good-enough futures for multiple, good-enough clusters of humanity.

Huh. Maybe that future could even start right now?

All typos are the fault of the machine overloards

Sources:

https://ai-2027.com/#narrative-2026-12-31

https://www.theverge.com/2023/8/29/23849056/google-meet-ai-duet-attend-for-me

https://www.ipcc.ch/report/ar6/wg1/downloads/report/IPCC_AR6_WGI_Chapter04.pdf

https://www.wri.org/insights/climate-change-effects-cities-15-vs-3-degrees-C

https://www.texastribune.org/2021/12/14/winter-weather-texas-climate-change/