Trump is spying on Reddit users, research shows America believes conspiracy theories, chip wars, and more

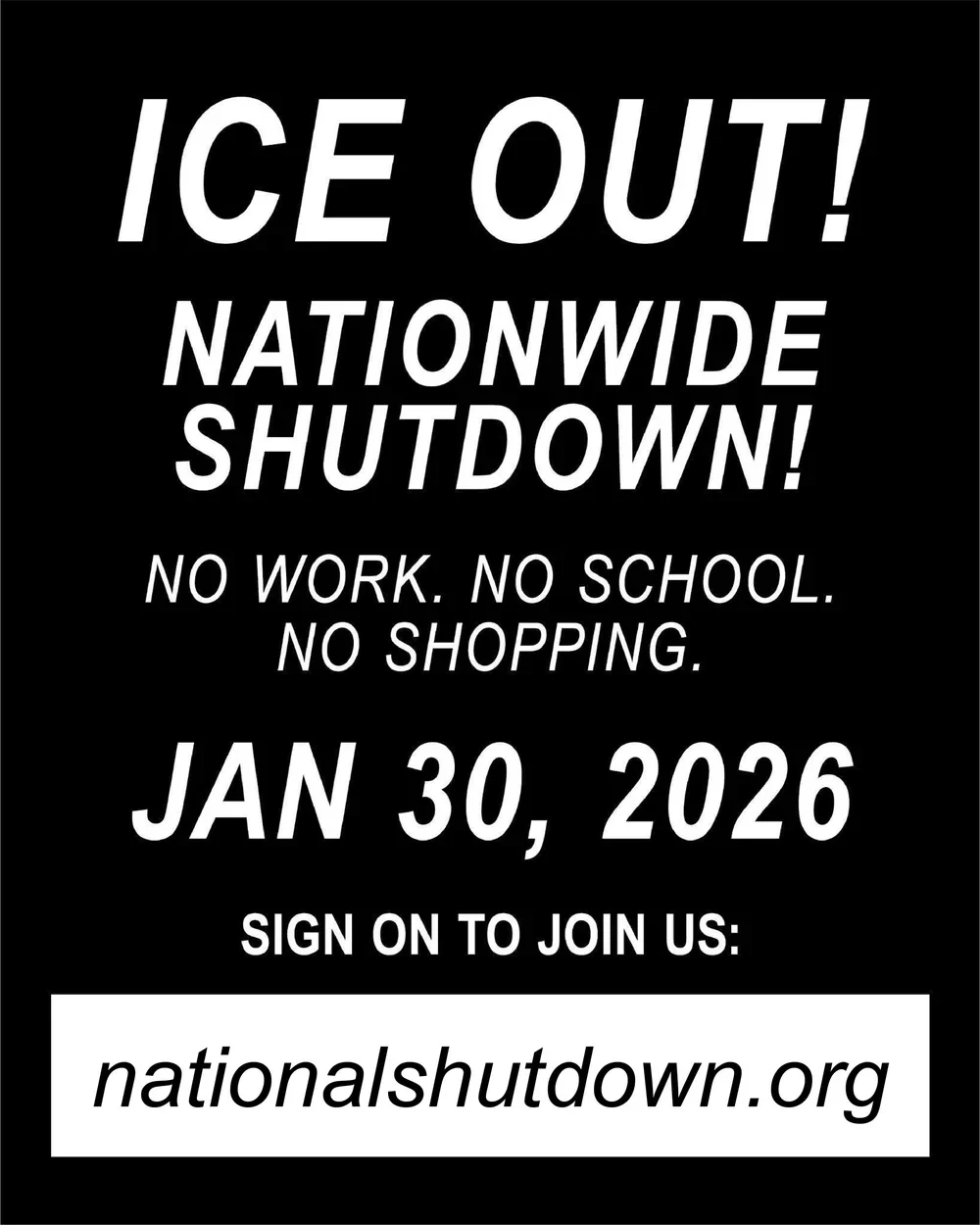

Nearly half of Americans believe false claims, Trump sanctions U.N. and ICC staff, GOP fast-tracks the SAVE America Act, Homeland Security monitors Reddit, a $55 billion contract for domestic military bases raises alarms. ICE neglects trans immigrants, AI spending causes national labor shortages, the EU targets TikTok's "addictive design," Texas fracking threatens floodplains, Trump reopens the Atlantic monument to fishing, massive hidden methane emissions, Substack profits from Nazi newsletters, and the FCC probes "The View."

Is This AI?

Being “against AI” is like being “against” a technology as basic as the screw, a simple machine. But in the same way we can be “pro-screw” and yet “anti-getting-screwed,” we can be “pro-AI” while at the same time “anti-getting-screwed-by-AI.” Here’s how.

Bovino Booted from Bar, Crypto Spirals, ICE Drops Death Cards

Bitcoin plunges as $2 trillion vanishes from crypto markets, Border Patrol's Gregory Bovino is booted from a Vegas bar, ICE agents leave "death cards" for immigrants, and the National Park Service erases "racist" references from Medgar Evers' history. Hillary Clinton dares James Comer to a public hearing, Antifa threats lead to a Minneapolis arrest, and the Jeff Bezos-funded Melania documentary sets suspicious Rotten Tomatoes records.

The Latest on the Tulsi Gabbard Whistleblower Complaint, Veggie Tales, and America Doesn’t Believe ICE

January 2026 layoffs hit their highest level since 2009 as Trump's ICE tactics face backlash. The Supreme Court upholds California redistricting while Utah probes GOP signature fraud. Stephen Miller expands immigration enforcement. AI music is banned in Sweden as the UN warns of water bankruptcy.

Two new ways Trump is going after your data

Trump administration weaponizes DHS administrative subpoenas against critics, forcing tech companies to surrender user data without judicial oversight. Patriot Front infiltrated by antifa activist. Ray Dalio warns of global capital war at Dubai summit. Mark Kelly challenges Pentagon over illegal orders video. Netflix CEO Ted Sarandos testifies on Warner Bros Discovery merger amid "woke" accusations. Education Department's Office for Civil Rights layoffs cost taxpayers $28-38 million. OpenAI pivots from research to ChatGPT commercialization, triggering senior staff exits.

What right-wing America is learning from Franco, more Epstein, less ICE

Trump faces dipping Pew Research scores as ICE expands warrantless arrests. Protests erupt for Alex Pretti while Mike Johnson eyes a DHS budget deal. Anthropic fights the Pentagon on AI, Melania debuts a film, and nurses top Gallup's honesty and ethics rankings.

Epstein, Gabbard, Melania: A Special Daily Intel Weekend Edition

ICE raids stall the GOP in Minnesota, Trump targets Cuba, the latest Epstein file analysis, the FBI raids Fulton County as perhaps part of an attempt to tie the 2020 election to Maduro, millions protest ICE, and more.

Residents of Minneapolis Nominated for Nobel

A 512-million-year-old 'fossil bonanza,' Trump weighs airstrikes on Iran, Signal conspiracies bloom, Georgia proposes 'Trump Mountain,' Alex Pretti's shooters go on leave, the FBI raids Fulton County for ballots, and half of the public wants to defund ICE. Zuck targets superintelligent social media, a bot farm glitch exposes political manipulation in Colombia, Trump fast-tracks oil in national forests, the High Seas Treaty takes effect, CBS News cuts veteran staff, The Boss releases a protest song, The Nation nominates Minneapolis residents for the Nobel Peace Prize, and new research reveals Mars had an Arctic-sized ocean three billion years ago.